We’re a leading IT services company focused on building next-gen products for startups and MNCs.

- DEFINE

- DELIVER

- ITERATE

Our High-Level Partners

We at GKM IT are proud to partner with a diverse range of clients across industries, a testament to our technical excellence and quality standards.

Our IT Solutions Services

CTO as a Service

Pick from our service suite and connect with chief technology officers who are ready to share their expertise and insights with you.

- Virtual CTO

- Fractional/Part-Time CTO

- On-Demand CTO

- CTO Consulting

Frontend Development

Craft captivating web pages and applications with our custom front-end services, built to engage users visually and deliver seamless user experiences.

- Front-End Design and Architecture

- Frontend Modernization

- CMS Design and Development

- Micro Frontend Development

- Single Page App Development

- Front-End Design System

- PWA App Development

- Front-End Performance Enhancement

- AMP App Development

Backend Development

Get a comprehensive range of customizable backend development solutions to upgrade your web and mobile applications.

- Backend Application Development

- Backend Security Solutions

- Application Admin Backend

- Cloud Backend Development

- API Integration and Development

- Cloud Migration

- Database Design and Management

- Microservices Architecture

- Server-side Scripting

- Performance Optimization

Mobile App Development

Experience our versatile and cutting-edge technology for mobile app development, tailored to meet your business objectives.

- Mobile App Conceptualization

- Mobile App Services Analytics

- Mobile App Consulting

- App Launch Strategy

- Mobile Application Development

- Hosting and Deployment

- Localization

- Maintenance and Support

DevOps Solutions

Leverage our DevOps solutions and more than a decade of experience in automation and operational delivery for seamless infrastructure management and deployment, backed by top-notch software development services.

- CI/CD Services

- Continuous Monitoring

- Infrastructure Management

- DevOps Consulting

- Configuration Management

- DevSecOps Services

- Continuous Testing

Software Testing as a Service

Our software testing ensures bug-free results across diverse domains. To expedite the testing process, we also offer automation testing services.

- Functional Testing

- Performance Testing

- Automation Testing

- Compatibility Testing

- API Testing

- Security Testing

- Mobile App Testing

- Usability Testing

- Regression Testing

- Localization Testing

UI/UX Design

We create visually stunning, user-centric, and cost-effective UI/UX designs that captivate users throughout their journey. Our design process is built to deliver solutions that resonate with customers and produce tangible business results.

- User Experience Design

- Custom UI/UX Design

- User Interface Design

- UI/UX Consulting

- Prototyping

- Microinteractions & Animations

- UI/UX Revamp

- Design Systems

- UX Writing

- UX Audit

Digital Marketing Service

Amplify your digital presence and reach your customers with our comprehensive digital marketing services. As a leading digital marketing consultancy, we are dedicated to delivering exceptional value to your business.

- Pay Per Click

- Online Reputation Management

- Search Engine Optimization

- UI/UX Design

- Social Media Marketing

- Graphic Design

- Content Marketing

- Branding

- Content Writing

- Email Marketing

Web3 Solutions

Embrace the future of the web with Web3 solutions built on blockchain, decentralized applications, and next-gen internet protocols to amplify your online presence.

- Blockchain Development

- Web3 Consulting

- Decentralized Applications (DApps) Development

- Interoperability Solutions

- Smart Contract Development

- Web3 Security

- Web3 Integration

- Tokenization Services

- NFT Solutions

- Web3 Education and Training

SAP Implementation Service

GKM IT is your destination for global IT solutions, consulting, and seamless integration of SAP ERP systems. We specialize in meticulously selecting, implementing, and maintaining custom SAP solutions.

- Expert SAP Project Management

- Seamless SAP Implementation, Upgrades, and Rollouts

- Comprehensive SAP Support across Modules

- Effortless SAP Platform Mergers and Demergers

Recruitment Process Outsourcing

We provide tailored RPO solutions that meet your resourcing requirements with dedicated recruitment support. Our robust suite of RPO services can be customized to strengthen your organization’s recruitment efforts.

- Permanent Recruitment Service

- Talent Sourcing Solutions

- Contract HR Staffing

- Onboarding Excellence

- Interview Concierge Services

- Build Your Own Team

Global IT Solutions: Industry Specializations

IT Service Provider for Healthcare Solutions

Medical Equipment Management and Technical Support

Our top-tier professionals excel in the management and upkeep of medical equipment, providing reliable tech support to ensure the uninterrupted functioning of your healthcare facility.

Cutting-Edge Machine Learning Algorithms

By harnessing advanced ML algorithms, our IT software solutions help the healthcare industry grow revenue. Our approach uses data analytics to optimize operations and boost revenue streams.

Efficient Drug Manufacturing

We streamline the manufacturing of essential drugs, optimizing inventory management for pharmaceutical companies in the healthcare sector.

HIPAA Compliance and Data Security

Our team is HIPAA certified and follows strict data security and privacy protocols. Your sensitive healthcare data remains secure with us, safeguarding patient confidentiality. We use techniques such as mock data and virtual machines to maintain data integrity and compliance with HIPAA regulations.

AI/ML Predictive Solutions

Our expertise in IT software solutions extends to AI/ML applications. Using AI/ML, we deliver valuable insights that help healthcare providers enhance patient care.

Built By GKM IT

Dayatani

Dayatani

IndiGG

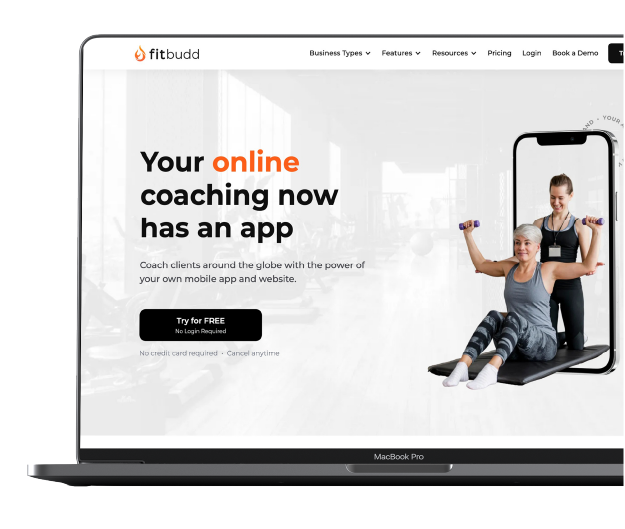

FitBudd

Built By GKM IT

Dayatani

Dayatani, an AgriTech startup, increased its weekly users and streamlined its business processes with tech support.

IndiGG

IndiGG, an NFT-based play-to-earn (P2E) gaming platform, grew its downloads to 10K+ with an average user rating of 4.8/5.

FitBudd

Our Clients

GKM IT, a leading IT company, led a pivotal EMEA project, delivering a responsive web app for quotation management. Their results-driven approach, agile expertise, and remarkable ability to understand and translate needs into efficient solutions ensured on-time, on-budget delivery.

Reliable and innovative, GKM IT consistently delivers top-notch technical support in Ruby, HTML 5, and Blockchain advisory. Their hardworking team consistently brings fresh and inventive ideas to the table. I wholeheartedly recommend them - a perfect 10 out of 10.

GKM IT, an IT solutions provider, developed the website for the MIT Media Lab India Initiative. Great team, incredibly responsive. It was amazing fun working with Jeetesh. We hope to continue our relationship in the future!

Guided by Jeetesh, the GKM IT team excels in innovative consultation and product development. Their commitment surpasses expectations, offering commendable expertise in Ruby and Blockchain, along with unparalleled technical and operational support.

Partnering with GKM IT, an IT software development company, has been a continuous success; their tech alignment with our goals is impeccable. Their holistic approach to understanding user behavior consistently delivers valuable insights. Best wishes for their ongoing success; we are eager for more collaborations ahead!

GKM IT has empowered our startup with cost-effective solutions, flexible support, and swift turnarounds, enabling sustainable scalability. We look forward to a continued and mutually beneficial relationship, wishing them success in their future endeavors.

Vashistha and Jeetesh from GKM IT played a pivotal role in crafting and implementing web interfaces and CRM systems for all three of our initiatives. Their professionalism, creativity, attention to detail, and timely delivery have been indispensable to our progress.

Collaborating with GKM IT, an IT solutions company, is like having an in-house team: their skilled professionals bring exceptional business insight and work ethic, and seamlessly elevate both the product value and the project vision.

Choosing Jeetesh and the GKM IT team was a pivotal decision for our digital messaging app. They are true collaborators, turning our ideas into a digital solution with a focus on user experience. Their constant availability and support made overcoming challenges seamless. Wishing GKM IT continued success!

Collaborating with Jeetesh and the GKM IT team was pivotal. Jeetesh's leadership transformed our budding professionals into a focused, communicative team, allowing me to mentor and innovate while ensuring the maturation of Reshotel's technology wing.

GKM IT, an IT service provider, delivered an exceptional e-commerce platform, streamlining sales and operations. The user-friendly admin section and proactive support made product management a breeze. Daily updates for our fresh farm produce became effortlessly smooth. Highly recommended!

Kudos to the exceptional team at GKM IT! The seamless website and flexible products have transformed our work effortlessly. No glitches since day one, saving us time and making work a breeze. Grateful for the invaluable partnership – looking forward to a long association! Cheers!

GKM IT, an IT software development company, transformed our visibility with a stunning, user-friendly website that effectively showcased our complex work. Their blend of aesthetic flair, technical expertise, and client-focused approach exceeded our expectations. Best wishes to the talented team!